Recognize image cards

This case uses eye-to-hand mode: the camera captures the work area, and OpenCV loads model data trained with TensorFlow to recognize each image block and locate its position in the video. From the relevant points in the image, the spatial coordinates of the block relative to the robot arm are calculated. A sequence of actions is then set up for the robot arm so that it puts the identified object into the bucket. The following sections introduce the code implementation of the entire case in detail.
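The pixel-to-arm conversion mentioned above can be sketched roughly as follows. This is a minimal illustration, not the code shipped in the package: it assumes the camera looks straight down, that two reference markers a known physical distance apart are visible on a horizontal line in the image, and that the image axes are parallel to the arm's axes. The names `pixel_to_arm`, `marker_distance_mm`, and `arm_offset_mm` are hypothetical.

```python
def pixel_to_arm(point_px, marker_a_px, marker_b_px,
                 marker_distance_mm, arm_offset_mm):
    """Map a pixel coordinate to (x, y) in millimetres in the arm's frame.

    Assumptions (not taken from the package): the camera looks straight
    down, the two markers lie on a horizontal line in the image, and the
    image axes are parallel to the arm's axes.
    """
    pixel_span = abs(marker_b_px[0] - marker_a_px[0])
    if pixel_span == 0:
        raise ValueError("markers must be horizontally separated")
    mm_per_px = marker_distance_mm / pixel_span

    # Use the midpoint between the markers as the origin of the area.
    center_x = (marker_a_px[0] + marker_b_px[0]) / 2
    center_y = (marker_a_px[1] + marker_b_px[1]) / 2

    # Offset from the center in pixels, scaled to millimetres, then shifted
    # by the (hypothetical) position of the area center in the arm's frame.
    x_mm = (point_px[0] - center_x) * mm_per_px + arm_offset_mm[0]
    y_mm = (point_px[1] - center_y) * mm_per_px + arm_offset_mm[1]
    return x_mm, y_mm
```

In a real setup the scale and offset would come from calibration against the actual recognition area rather than from hand-picked values.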

1 Program Compilation

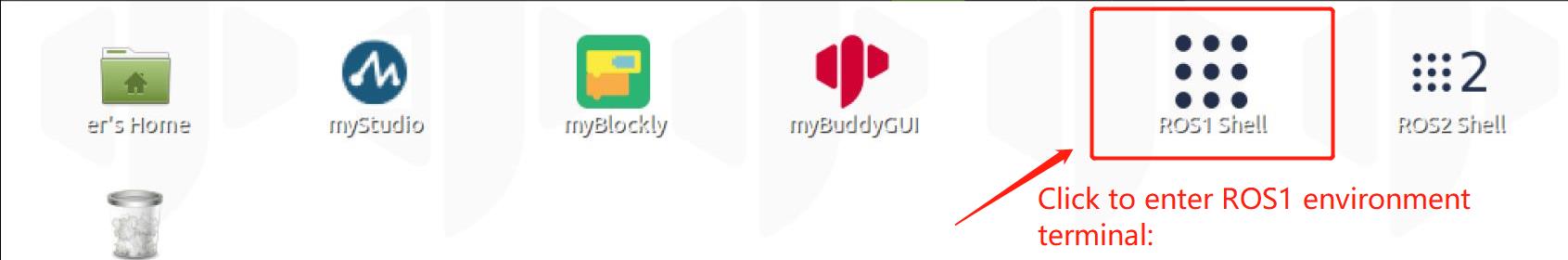

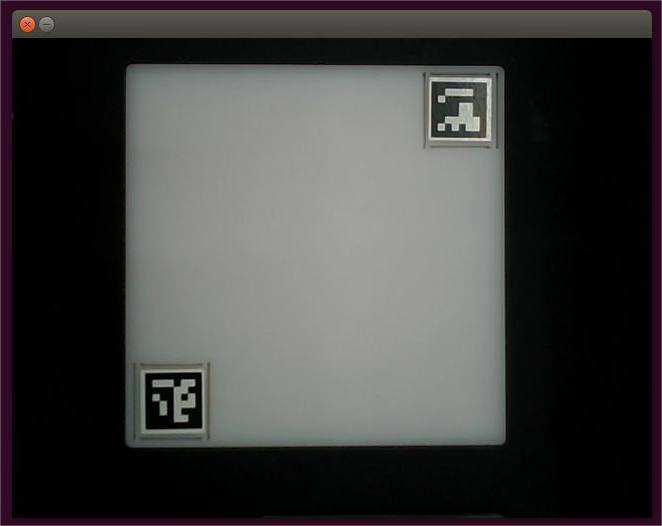

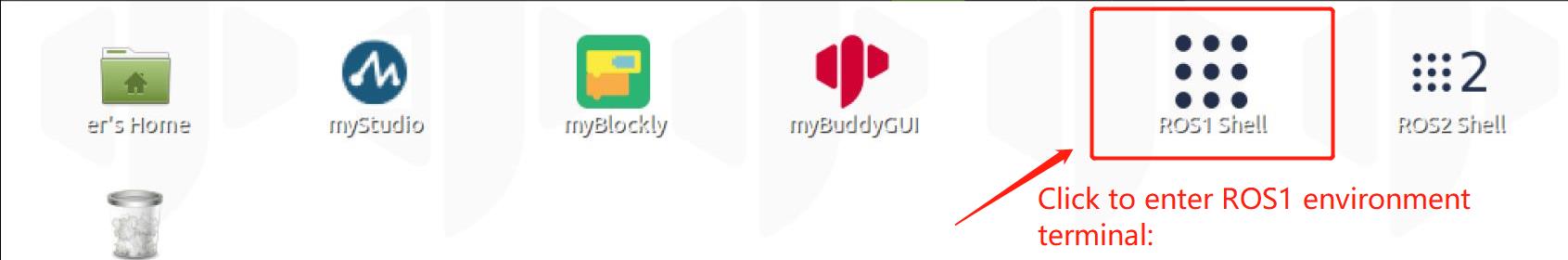

Click the ROS1 Shell icon on the desktop or the corresponding icon in the lower bar of the desktop to open the ROS1 environment terminal:

Then enter the following commands:

cd ~/catkin_ws # Go to the workspace root folder

catkin_make # Build the code in the workspace

source devel/setup.bash # Add environment variables

2 Camera Adjustment

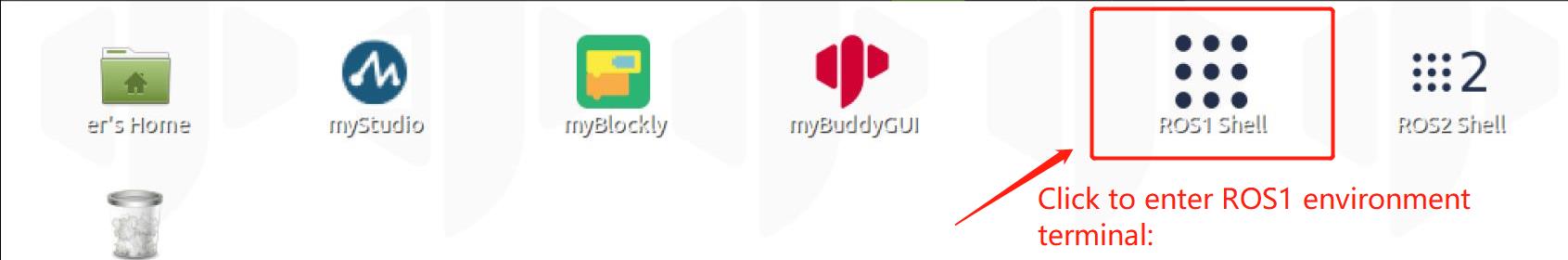

First, use Python to run OpenVideo.py under the aikit_V2 package. If the camera that opens is the computer's built-in camera, cap_num needs to be modified; for details, see Precautions. Make sure that the camera covers the entire recognition area and that the recognition area appears square in the video, as shown in the figure. If the recognition area does not meet these requirements in the video, adjust the camera position.

Click the ROS1 Shell icon on the desktop, or the corresponding icon in the lower bar of the desktop, to open the ROS1 environment terminal, and enter the following command to go to the target folder:

cd ~/catkin_ws/src/mycobot_ros/mycobot_ai/aikit_280_pi/

- Enter the following command to open the camera for adjustment:

python scripts/OpenVideo.py
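As a rough aid for the adjustment described above, the sketch below checks whether four detected corners of the recognition area form an approximately square shape in the image. It is an illustration under assumptions: how the corners are detected is out of scope, and `is_roughly_square` with its `tolerance` parameter are hypothetical names that are not part of OpenVideo.py.

```python
import math


def is_roughly_square(corners, tolerance=0.1):
    """Return True if four corner points (listed in order around the shape)
    have near-equal sides and near-equal diagonals."""
    if len(corners) != 4:
        raise ValueError("expected exactly four corner points")

    def dist(p, q):
        return math.hypot(p[0] - q[0], p[1] - q[1])

    sides = [dist(corners[i], corners[(i + 1) % 4]) for i in range(4)]
    diagonals = [dist(corners[0], corners[2]), dist(corners[1], corners[3])]
    if min(sides) == 0:
        return False  # two corners coincide, so this is not a quadrilateral

    # Equal sides rule out rectangles; equal diagonals rule out rhombi.
    sides_ok = (max(sides) - min(sides)) / max(sides) <= tolerance
    diagonals_ok = (max(diagonals) - min(diagonals)) / max(diagonals) <= tolerance
    return sides_ok and diagonals_ok
```

If the check fails, the camera is probably tilted or off-center relative to the recognition area and should be repositioned.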

2 Add New Image

- Open a console terminal (shortcut key Ctrl+Alt+T) and go to the target folder:

cd ~/catkin_ws/src/mycobot_ros/mycobot_ai/aikit_280_pi/

- Enter the following command to start the process of adding images.

python scripts/add_img.py

Follow the prompts printed in the terminal, and capture the image region in the second image window that pops up.

Once the capture area has been selected, press the Enter key. Then, following the terminal prompt, enter a number (1~4) to choose the folder that corresponds to the image, and press the Enter key again to save the image to that folder.
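The saving step above can be sketched as follows. This only illustrates mapping the typed number to a folder; the folder layout (`res/1` to `res/4`), the sequential file naming, and the function name `target_path_for_choice` are assumptions and do not describe the actual behaviour of add_img.py.

```python
from pathlib import Path


def target_path_for_choice(base_dir, choice, extension=".jpg"):
    """Return the next free file path in the folder selected by `choice`.

    `choice` is the text typed at the prompt; only 1 to 4 are accepted.
    The layout base_dir/res/<number>/ is a hypothetical example.
    """
    text = str(choice).strip()
    if text not in {"1", "2", "3", "4"}:
        raise ValueError("please enter a number from 1 to 4")

    folder = Path(base_dir) / "res" / text
    folder.mkdir(parents=True, exist_ok=True)

    # Number files sequentially so existing images are never overwritten.
    index = 1
    while (folder / f"{index}{extension}").exists():
        index += 1
    return folder / f"{index}{extension}"
```

Rejecting anything outside 1 to 4 before touching the disk keeps a mistyped entry from creating a stray folder.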

3 Case Reproduction

Raspberry Pi version:

Click the ROS1 Shell icon on the desktop, or the corresponding icon in the lower bar of the desktop, to open the ROS1 environment terminal, and enter the following command to start the launch file:

roslaunch aikit_280_pi vision_pi.launch

- Open another ROS1 environment terminal (the opening method is the same as above), and enter the following command to enter the target folder:

cd ~/catkin_ws/src/mycobot_ros/mycobot_ai/aikit_280_pi/

- Then enter the following command to start the image recognition program.

python scripts/aikit_img.py

- When the command terminal shows ok and the camera window opens normally, the program is running successfully. Recognizable objects can then be placed in the recognition area to be recognized and picked up.
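Once an object has been recognized and located, the arm's actions for placing it into a bucket could be planned along these lines. This is a hypothetical sketch: the bucket coordinates, the heights, the step names, and the function `plan_pick_and_place` are placeholders, and the plan is only built as data here rather than sent to the robot.

```python
# Hypothetical bucket positions in the arm's frame (x, y in mm), keyed by
# the folder number (1-4) of the recognized image. Values are placeholders.
BUCKETS_MM = {
    1: (130.0, -160.0),
    2: (230.0, -160.0),
    3: (130.0, 160.0),
    4: (230.0, 160.0),
}


def plan_pick_and_place(card_xy_mm, card_class, hover_z=170.0, grab_z=100.0):
    """Return an ordered list of (action, x, y, z) steps for one card."""
    if card_class not in BUCKETS_MM:
        raise ValueError(f"unknown class: {card_class}")
    cx, cy = card_xy_mm
    bx, by = BUCKETS_MM[card_class]
    return [
        ("move", cx, cy, hover_z),      # hover above the card
        ("move", cx, cy, grab_z),       # descend to the card
        ("grip_on", cx, cy, grab_z),    # e.g. switch on a suction pump
        ("move", cx, cy, hover_z),      # lift the card
        ("move", bx, by, hover_z),      # carry it over the bucket
        ("grip_off", bx, by, hover_z),  # release into the bucket
    ]
```

Keeping the plan as plain data makes it easy to print and inspect before any motion command is issued.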

Precautions

- If the camera does not automatically frame the recognition area correctly, close the program and adjust the camera position by moving the camera to the left or right.

- If the command terminal does not display ok and the picture cannot be recognized, move the camera slightly backward or forward; the program is running normally once the command terminal displays ok.

- Since the recognition method has been changed, please make sure that there is a folder for storing pictures and pictures that need to be recognized under the code path. If not, you can use python scripts/add_img.py to add pictures.

- OpenCV color recognition will be affected by the environment, and the recognition effect will be greatly reduced in a darker environment.
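The check for picture folders mentioned in the precautions above can be sketched as a small pre-flight helper. The folder names (`1` to `4`), the accepted file suffixes, and the function name `missing_image_folders` are assumptions for illustration; adjust them to the layout actually used under the code path.

```python
from pathlib import Path

# File suffixes treated as images in this sketch (an assumption).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def missing_image_folders(base_dir, folder_names=("1", "2", "3", "4")):
    """Return the names of folders that are absent or contain no images."""
    missing = []
    for name in folder_names:
        folder = Path(base_dir) / name
        has_image = folder.is_dir() and any(
            p.suffix.lower() in IMAGE_SUFFIXES for p in folder.iterdir()
        )
        if not has_image:
            missing.append(name)
    return missing
```

If the returned list is not empty, run python scripts/add_img.py to add pictures for the listed folders before starting recognition.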